How can we use AI ethically in education?


The Holy Grail of EdTech is an AI-driven autonomous system that provides a personalised learning experience with minimal teacher input. This is hardly surprising given the potential financial rewards that such a system would bring. Education is very big business. According to IBIS Capital [Global EdTech Industry Report 2016], education was a $5 trillion industry globally in 2014 and was growing at $600 billion a year. However, only 2% of it is digitised: education as an industry is a ‘late adopter’ at best. Little wonder, then, that EdTech is increasingly seen as a ripe market for investors.

Three factors make an AI-driven autonomous system very attractive:

- the importance and cost of education to Governments and parents, who value it as the key to national and personal prosperity;

- the global teacher shortage and the unsustainability of the present model of one teacher to 20-30 students; and

- the abundant scope for new markets (pandemic aside, there are currently 263m children in the world not in education).

There is scope here to be the ‘Amazon of Education’ - no wonder venture capitalists are turning their attention to EdTech.

However, we are not talking about automating the sale of books here - we are talking about the education of young people, and there are a range of ethical concerns that need to be addressed at an early stage.

- Skewed Dataset. We are still in the ‘Early Adopter’ phase of the use of Educational AI. At present it is an ‘enhancement’ of existing educational processes, so pioneering schools need a relatively high level of digital and pedagogical sophistication to deploy these systems. We need to recognise that these systems are therefore being developed using an unrepresentative dataset, drawn from a narrow band of schools, which has implications for fairness and wider applicability down the line.

- AI Summative Judgements. At present, educational platforms are using AI to make formative judgements on individual students (based on what is likely to be a globally unrepresentative dataset) in order to tailor learning to each student's needs. There is a risk that AI educational systems may eventually replace the present public examination system, making summative judgements that have a significant impact on life choices such as access to higher education or certain levels of employment. Furthermore, judgements might be made on wider criteria than are presently deployed. For example, rather than just assessing whether or not a student has mastered a particular skill or unit of knowledge, it would be possible to assess how long a student takes to do so.

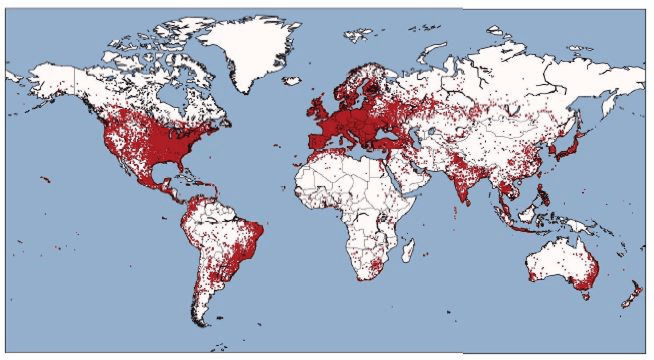

- Digital Divide. As AI becomes more commonplace, there is a danger of an educational ‘digital divide’ between generally wealthy countries that can afford to deploy AI educational systems and those that cannot (cf. the significantly lower participation from Africa in the recent MIT project reported in Awad et al., ‘The Moral Machine Experiment’, Nature, Vol. 563, November 2018).

- Monopoly on Education. It is conceivable that, in time, a few large companies, the ‘Amazons of Education’ if you like, will dominate the automated-education industry. These providers would be able to control the content of what is taught (in the way that some Governments around the world do today). It would be possible, for example, for these companies to decide that the Holocaust should not be on the C20 history syllabus (as is the case in many Arab states today), or to promote certain ideologies or lifestyle choices (vegetarianism, heterosexuality, etc.). We should avoid a world where a minority have a monopoly on education.

It is time to have the moral debate about AI in education.

Mark S. Steed is the Principal and CEO of Kellett School, the British School in Hong Kong; and previously ran schools in Devon, Hertfordshire and Dubai. He tweets @independenthead
